Updated on 2021-11-01

What is Logical Volume Management

LVM provides a more efficient method to manage disks on a system. The traditional method was dealing with primary and extended partitions. Under the control of LVM, disks can be grouped as one whole disk or one single storage. It can be resized and moved around in real-time without worrying about disks of different sizes or filesystems.

Understanding LVM

LVM consists of Physical Volumes, Volume Groups, and Logical Volumes:

Physical Volumes - Physical disks like /dev/sda and /dev/sdb must be initialized as Physical Volumes (PVs). Each PV is divided into units known as physical extents (PEs).

Volume Groups - Volume Groups (VGs) are created by combining the Physical Extents of one or more Physical Volumes into a pool of disk space, out of which Logical Volumes can be allocated.

Logical Volumes - Logical Volumes (LVs) are created from the disk space available in a Volume Group. Each LV is exposed as a device-mapper block device under /dev/mapper and /dev, and can then be formatted with a regular filesystem such as ext4. The entries in /dev/mapper and /dev/vg/lv are symlinks to /dev/dm-* devices, which are managed by the device-mapper driver. You can read more about it here.

Here’s an example:

root@ubuntu-local:/dev/ubuntu-vg# ls -l /dev/mapper/ubuntu--vg-ubuntu--lv

lrwxrwxrwx 1 root root 7 Apr 29 07:40 /dev/mapper/ubuntu--vg-ubuntu--lv -> ../dm-0

root@ubuntu-local:/dev/ubuntu-vg#

root@ubuntu-local:/dev/ubuntu-vg#

root@ubuntu-local:/dev/ubuntu-vg# ls -l /dev/ubuntu-vg/ubuntu-lv

lrwxrwxrwx 1 root root 7 Apr 29 07:40 /dev/ubuntu-vg/ubuntu-lv -> ../dm-0

root@ubuntu-local:/dev/ubuntu-vg#
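The doubled hyphens in the /dev/mapper name are not a typo: device-mapper joins the VG and LV names with a single hyphen, so a hyphen inside either name is escaped by doubling it. Here is a minimal sketch of that naming rule (the function name dm_name is made up for illustration and is not an LVM tool):

```shell
# dm_name VG LV: print the /dev/mapper name used for the LV "LV" in the VG "VG".
# Hyphens inside either name are doubled; a single hyphen separates VG and LV.
dm_name() {
    vg=$(printf '%s' "$1" | sed 's/-/--/g')
    lv=$(printf '%s' "$2" | sed 's/-/--/g')
    printf '%s-%s\n' "$vg" "$lv"
}

dm_name ubuntu-vg ubuntu-lv   # ubuntu--vg-ubuntu--lv
dm_name test_vg test_lv       # test_vg-test_lv
```

Names without hyphens, such as test_vg, pass through unchanged.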

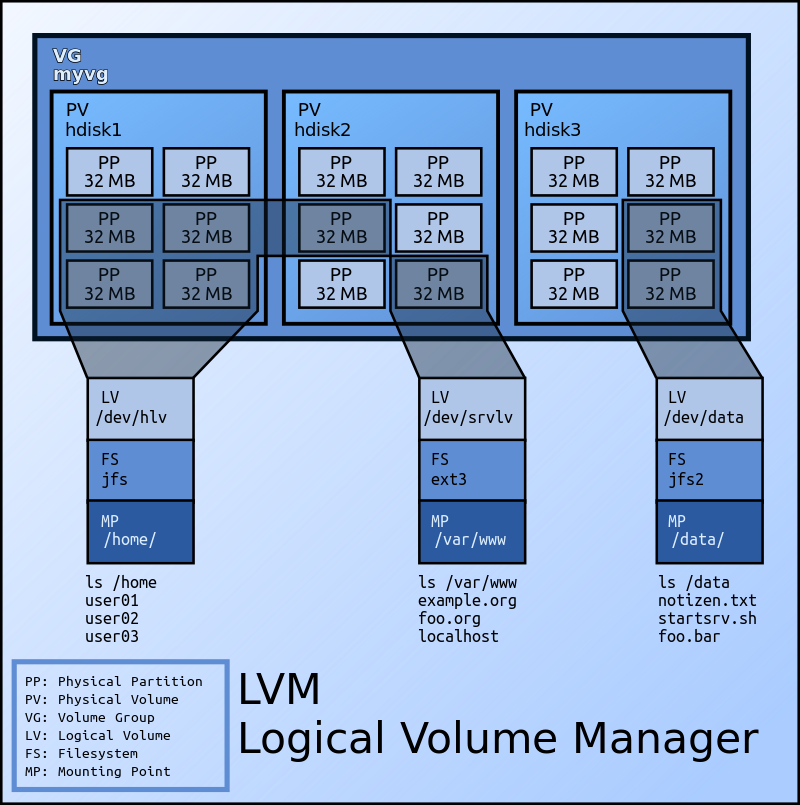

Fig. 1: LVM Elements(from wikipedia)


In Figure 1 - Three Physical Volumes: hdisk 1-3 are combined into a Volume Group called myvg. Three Logical Volumes are created from that VG, called /dev/hlv, /dev/srvlv, and /dev/data. Those LVs are mounted on /home, /var/www, and /data.

If one of those LVs needs more disk space, you can just add a new PV to the VG and expand the LV without unmounting the filesystem.

You’re not limited to a single Volume Group. For example, if you notice that the /var/lib/docker/overlay2/ directory, which contains Docker images, is filling up your root filesystem, you can create a new Volume Group and Logical Volume to store the images.

Check Your system

I’m on an Ubuntu Virtual Machine. I chose LVM during the installation. You can run the following commands if you have a similar setup:

root@ubuntu-local:~# ls -l /dev/mapper/

total 0

crw------- 1 root root 10, 236 Apr 28 06:49 control

lrwxrwxrwx 1 root root 7 Apr 28 06:49 ubuntu--vg-ubuntu--lv -> ../dm-0

# exclude loop devices

root@ubuntu-local:~# lsblk --exclude=7

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 1M 0 part

├─sda2 8:2 0 1G 0 part /boot

└─sda3 8:3 0 19G 0 part

└─ubuntu--vg-ubuntu--lv 253:0 0 19G 0 lvm /

sdb 8:16 0 1G 0 disk

sdc 8:32 0 1G 0 disk

sdd 8:48 0 1G 0 disk

sde 8:64 0 1G 0 disk

sr0 11:0 1 1024M 0 rom

root@ubuntu-local:~# dmsetup ls

ubuntu--vg-ubuntu--lv (253:0)

root@ubuntu-local:~# dmsetup info -c

Name Maj Min Stat Open Targ Event UUID

ubuntu--vg-ubuntu--lv 253 0 L--w 1 1 0 LVM-A4nyBjXd6TwvGTc3vHCF5d31lP1e6uoPyOWc574Zm5cfIm3Zw2vTbcTjbBbCw1fh

root@ubuntu-local:~# findmnt /

TARGET SOURCE FSTYPE OPTIONS

/ /dev/mapper/ubuntu--vg-ubuntu--lv ext4 rw,relatime

The root filesystem (/) is on LVM. Run pvs, vgs, and lvs to get information about each LVM component:

root@ubuntu-local:~# pvs

PV VG Fmt Attr PSize PFree

/dev/sda3 ubuntu-vg lvm2 a-- <19.00g 0

root@ubuntu-local:~# vgs

VG #PV #LV #SN Attr VSize VFree

ubuntu-vg 1 1 0 wz--n- <19.00g 0

root@ubuntu-local:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

ubuntu-lv ubuntu-vg -wi-ao---- <19.00g

I’ll shed some light on this later.

Constructing a Logical Volume

To create Logical Volumes, we need to:

- Create one partition that uses the whole disk, and flag it as lvm

- Initialize the disk or multiple disks as Physical Volumes

- Create a Volume Group

- Create a Logical Volume

- Format the Logical Volume with a filesystem

- Put an entry in /etc/fstab

Note: According to Red Hat, if you use a whole disk as a Physical Volume, the disk must have no partition table. In that case you can skip the partitioning step.
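The steps above can be sketched as a dry-run script that only prints the commands it would run. The device, VG, LV and mount point values are placeholders taken from this walkthrough, and the RUN variable and lvm_setup function are conventions invented for this sketch. The parted -s flag just suppresses interactive prompts.

```shell
# Placeholder values; adjust them to your own disk and names.
DISK=/dev/sdb
VG=test_vg
LV=test_lv
MNT=/test-lvm01

# By default every command is only printed. Set RUN to an empty string
# (and run as root) to execute the commands for real.
RUN=${RUN-echo}

lvm_setup() {
    $RUN parted -s "$DISK" mklabel msdos           # partition table
    $RUN parted -s "$DISK" mkpart primary 0% 100%  # one partition, whole disk
    $RUN parted -s "$DISK" set 1 lvm on            # flag it as lvm
    $RUN pvcreate "${DISK}1"                       # Physical Volume
    $RUN vgcreate "$VG" "${DISK}1"                 # Volume Group
    $RUN lvcreate -l 100%FREE -n "$LV" "$VG"       # Logical Volume
    $RUN mkfs.ext4 "/dev/$VG/$LV"                  # filesystem
    $RUN mkdir -p "$MNT"
    $RUN mount "/dev/$VG/$LV" "$MNT"
}

lvm_setup
```

Reading the printed commands before running them for real is a cheap safeguard, since mklabel and mkfs.ext4 destroy whatever is on the target.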

Let’s partition /dev/sdb. I’ll be using the whole disk. First let’s give it a label:

root@ubuntu-local:~# parted /dev/sdb mklabel msdos

Information: You may need to update /etc/fstab.

root@ubuntu-local:~# parted /dev/sdb print

Model: VMware, VMware Virtual S (scsi)

Disk /dev/sdb: 1074MB

Sector size (logical/physical): 512B/512B

Partition Table: msdos

Disk Flags:

Number Start End Size Type File system Flags

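Since I’m using the whole disk, the type of label is not a priority here, but every partitioned disk needs one. If you’ll be working with multiple partitions, msdos/MBR allows up to 4 primary partitions (more only through an extended partition with logical partitions inside it), and GPT allows up to 128 partitions by default.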

Next, I’m gonna create the first and only partition:

root@ubuntu-local:~# parted /dev/sdb mkpart primary 0% 100%

Information: You may need to update /etc/fstab.

root@ubuntu-local:~# parted /dev/sdb print

Model: VMware, VMware Virtual S (scsi)

Disk /dev/sdb: 1074MB

Sector size (logical/physical): 512B/512B

Partition Table: msdos

Disk Flags:

Number Start End Size Type File system Flags

1 1049kB 1074MB 1073MB primary

Let’s set the lvm flag:

root@ubuntu-local:~# parted /dev/sdb set 1 lvm on

Information: You may need to update /etc/fstab.

root@ubuntu-local:~# parted /dev/sdb print

Model: VMware, VMware Virtual S (scsi)

Disk /dev/sdb: 1074MB

Sector size (logical/physical): 512B/512B

Partition Table: msdos

Disk Flags:

Number Start End Size Type File system Flags

1 1049kB 1074MB 1073MB primary lvm

Run lsblk to verify:

root@ubuntu-local:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

---REDACTED---

sdb 8:16 0 1G 0 disk

└─sdb1 8:17 0 1023M 0 part

Disk Partitioning is done. Let’s create the physical volume with pvcreate:

root@ubuntu-local:~# pvcreate -v /dev/sdb1

Wiping signatures on new PV /dev/sdb1.

Set up physical volume for "/dev/sdb1" with 2095104 available sectors.

Zeroing start of device /dev/sdb1.

Writing physical volume data to disk "/dev/sdb1".

Physical volume "/dev/sdb1" successfully created.

root@ubuntu-local:~#

root@ubuntu-local:~#

root@ubuntu-local:~# pvs

PV VG Fmt Attr PSize PFree

/dev/sda3 ubuntu-vg lvm2 a-- <19.00g 0

/dev/sdb1 lvm2 --- 1023.00m 1023.00m

root@ubuntu-local:~#

root@ubuntu-local:~#

root@ubuntu-local:~# pvscan

PV /dev/sda3 VG ubuntu-vg lvm2 [<19.00 GiB / 0 free]

PV /dev/sdb1 lvm2 [1023.00 MiB]

Total: 2 [<20.00 GiB] / in use: 1 [<19.00 GiB] / in no VG: 1 [1023.00 MiB]

Then run pvs to confirm the action. If you can’t find the volume, issue a pvscan. As you can see, there’s no VG assigned to it yet.

The pvcreate part is not mandatory. You can just issue a vgcreate (see below) to create both the Physical Volume and the Volume Group. pvcreate only needs to be run first if you want to change the number or size of the LVM metadata areas, for example with pvcreate --metadatasize. The size of the Physical Extents is a property of the Volume Group and is set with vgcreate, for example vgcreate -s 8m test_vg /dev/sdb1 to use 8 MiB extents.

Now, We need to create a Volume Group that consists of the physical volume created above.

The syntax is vgcreate [vg name] [pv]. Then run vgs to confirm the action, or vgscan if the new group doesn’t show up:

root@ubuntu-local:~# vgcreate -v test_vg /dev/sdb1

Wiping signatures on new PV /dev/sdb1.

Adding physical volume '/dev/sdb1' to volume group 'test_vg'

Archiving volume group "test_vg" metadata (seqno 0).

Creating volume group backup "/etc/lvm/backup/test_vg" (seqno 1).

Volume group "test_vg" successfully created

root@ubuntu-local:~#

root@ubuntu-local:~#

root@ubuntu-local:~# vgs

VG #PV #LV #SN Attr VSize VFree

test_vg 1 0 0 wz--n- 1020.00m 1020.00m

ubuntu-vg 1 1 0 wz--n- <19.00g 0

root@ubuntu-local:~#

root@ubuntu-local:~#

root@ubuntu-local:~# vgscan

Found volume group "test_vg" using metadata type lvm2

Found volume group "ubuntu-vg" using metadata type lvm2
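Why does a 1023 MiB partition give a 1020 MiB Volume Group? Here is a rough sketch of the arithmetic, assuming the default 4 MiB extent size and about 1 MiB reserved at the start of the PV for the label and metadata. Both numbers are assumptions about the defaults, not values shown in the output above, and usable_mib is a name made up for this example.

```shell
# usable_mib PV_MIB [PE_MIB] [RESERVED_MIB]
# Space left after the reserved area, rounded down to whole extents.
usable_mib() {
    pv=$1
    pe=${2:-4}          # assumed default extent size in MiB
    reserved=${3:-1}    # assumed space kept for label and metadata in MiB
    extents=$(( ($pv - $reserved) / $pe ))
    echo "$extents extents, $(( $extents * $pe )) MiB"
}

usable_mib 1023   # 255 extents, 1020 MiB
```

The 255 extents match what lvextend reports later for the 1020 MiB Logical Volume.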

The Volume Group is set. Let’s create the Logical Volume. The syntax is lvcreate -L [size] -n [name] vgname or lvcreate -l [size] -n [name] vgname.

- -L: The size, e.g. 1500M or 1.1G.

- -l: The number of Physical Extents, or a percentage such as 100%FREE.

root@ubuntu-local:~# lvcreate -l 100%FREE -n test_lv test_vg

Logical volume "test_lv" created.

root@ubuntu-local:~#

root@ubuntu-local:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

test_lv test_vg -wi-a----- 1020.00m

ubuntu-lv ubuntu-vg -wi-ao---- <19.00g

root@ubuntu-local:~#

root@ubuntu-local:~# lvscan

ACTIVE '/dev/test_vg/test_lv' [1020.00 MiB] inherit

ACTIVE '/dev/ubuntu-vg/ubuntu-lv' [<19.00 GiB] inherit

-l 100%FREE means use all the free space available in the Volume Group. Now, let’s format the Logical Volume with a filesystem and mount it:

root@ubuntu-local:~# mkfs.ext4 /dev/mapper/test_vg-test_lv

mke2fs 1.45.5 (07-Jan-2020)

Creating filesystem with 261120 4k blocks and 65280 inodes

Filesystem UUID: b4cf65ce-abab-4da1-8c6f-a4ba10a05f75

Superblock backups stored on blocks:

32768, 98304, 163840, 229376

Allocating group tables: done

Writing inode tables: done

Creating journal (4096 blocks): done

Writing superblocks and filesystem accounting information: done

root@ubuntu-local:~# mkdir /test-lvm01; mount /dev/mapper/test_vg-test_lv /test-lvm01

Run df -h to verify:

root@ubuntu-local:~# df -h | grep test

/dev/mapper/test_vg-test_lv 988M 2.6M 919M 1% /test-lvm01

Append the following line in /etc/fstab:

## LVM Test

/dev/mapper/test_vg-test_lv /test-lvm01 ext4 defaults 1 2

You can set the mount options according to your needs. I’m gonna stick with the defaults here. Mounting by UUID is not recommended with LVM, because a snapshot carries the same filesystem UUID as its origin.
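The six fstab fields are, in order: device, mount point, filesystem type, options, dump flag, and fsck pass number. A small helper can keep that order straight. This is only a sketch, and fstab_line is a made-up name, not an existing tool:

```shell
# fstab_line DEVICE MOUNTPOINT FSTYPE [OPTIONS] [DUMP] [PASS]
# Prints one /etc/fstab entry with the fields in the expected order.
fstab_line() {
    printf '%s %s %s %s %s %s\n' \
        "$1" "$2" "$3" "${4:-defaults}" "${5:-0}" "${6:-2}"
}

fstab_line /dev/mapper/test_vg-test_lv /test-lvm01 ext4 defaults 1 2
# To append it for real (as root), redirect the output:
#   fstab_line ... >> /etc/fstab
```

After editing the file, mount -a is a quick way to check that the new entry mounts cleanly before the next reboot.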

Extend a Volume Group, Logical Volume and Resize the Filesystem

To extend a Volume Group, we need to add a Physical Volume. I’ll be partitioning /dev/sdc (not shown). The command vgextend is used to extend a Volume Group. The syntax is vgextend [vgname] [disk]. First, I’ll issue a vgs:

root@ubuntu-local:~# vgs test_vg

VG #PV #LV #SN Attr VSize VFree

test_vg 1 1 0 wz--n- 1020.00m 0

As you can see, there’s only 1 PV and 0 free space. Extending the volume group:

root@ubuntu-local:~# vgextend test_vg /dev/sdc1

Physical volume "/dev/sdc1" successfully created.

Volume group "test_vg" successfully extended

root@ubuntu-local:~#

root@ubuntu-local:~#

root@ubuntu-local:~# vgs test_vg

VG #PV #LV #SN Attr VSize VFree

test_vg 2 1 0 wz--n- 1.99g 1020.00m

The Physical Volume is created automatically, so we don’t need to run pvcreate manually. Now there are 2 PVs and around 1G of free space.

To extend a Logical Volume, the command lvextend is used. After the extension, the filesystem must be resized. Normally, after the extension you would run a command like resize2fs (for ext2/3/4) to grow the filesystem. The -r option of lvextend does that automatically. Under the hood, it calls fsadm on the device. fsadm supports ext2/3/4, ReiserFS, and XFS filesystems.

The syntax is:

- lvextend -l size -r [vg/lv]

- lvextend -l +size -r [vg/lv]

-l size or -L size sets the Logical Volume to exactly the size you specify (-l counts extents or takes a percentage, -L takes a size with a unit). For example, if the LV is 7G, -L 8G resizes it to 8G. If the specified size is smaller than the current size, lvextend refuses to run; shrinking is done with lvreduce or lvresize.

-l +size or -L +size adds the specified amount to the LV. For example, running -L +3G on an 8G LV results in 11G.
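The difference between an absolute and a relative size argument comes down to the leading plus sign. A tiny sketch of that rule, using whole gigabytes only (new_size is a hypothetical helper, not part of LVM):

```shell
# new_size CURRENT_G ARG: resulting size in G for an argument like "8" or "+3".
new_size() {
    case $2 in
        +*) echo $(( $1 + ${2#+} )) ;;   # relative: added to the current size
        *)  echo "$2" ;;                 # absolute: becomes the new size
    esac
}

new_size 8 +3   # 11
new_size 7 8    # 8
```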

Let’s run it:

root@ubuntu-local:~# lvextend -l +100%FREE -r test_vg/test_lv

Size of logical volume test_vg/test_lv changed from 1020.00 MiB (255 extents) to 1.99 GiB (510 extents).

Logical volume test_vg/test_lv successfully resized.

resize2fs 1.45.5 (07-Jan-2020)

Filesystem at /dev/mapper/test_vg-test_lv is mounted on /test-lvm01; on-line resizing required

old_desc_blocks = 1, new_desc_blocks = 1

The filesystem on /dev/mapper/test_vg-test_lv is now 522240 (4k) blocks long.

root@ubuntu-local:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

test_lv test_vg -wi-ao---- 1.99g

ubuntu-lv ubuntu-vg -wi-ao---- <19.00g

root@ubuntu-local:~#

Rename a Volume Group and a Logical Volume

I’ll rename the Volume Group test_vg to vg_home02 and the Logical Volume test_lv to lv_home02:

root@ubuntu-local:~# vgrename test_vg vg_home02

Volume group "test_vg" successfully renamed to "vg_home02"

root@ubuntu-local:~#

root@ubuntu-local:~# lvrename /dev/vg_home02/test_lv /dev/vg_home02/lv_home02

Renamed "test_lv" to "lv_home02" in volume group "vg_home02"

root@ubuntu-local:~#

Don’t forget to edit its entry in /etc/fstab.

Remove LV, VG, and PV

I’ll be removing /dev/vg_home02/lv_home02. I won’t be performing a backup. The steps are as follows:

- Unmount the mount point

- Remove the LV with lvremove

- Remove the VG with vgremove

- Remove the PV with pvremove

I encountered an interesting problem while performing an lvremove. Follow along.

First, unmount the mount point:

umount /home02

Remove the LV:

root@ubuntu-local:~# lvremove /dev/vg_home02/lv_home02

Logical volume vg_home02/lv_home02 contains a filesystem in use.

The filesystem was unmounted successfully, so I didn’t understand why lvremove reported a filesystem in use. lsof and fuser showed nothing either. lsblk, however, showed the LV mounted on a different mount point:

root@ubuntu-local:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

---OUTPUT REDACTED---

sdb 8:16 0 1G 0 disk

└─sdb1 8:17 0 1023M 0 part

└─vg_home02-lv_home02 253:1 0 2G 0 lvm /test-lvm01

sdc 8:32 0 1G 0 disk

└─sdc1 8:33 0 1023M 0 part

└─vg_home02-lv_home02 253:1 0 2G 0 lvm /test-lvm01

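/test-lvm01 is the mount point I used earlier. Does LVM do this automatically? I couldn’t find anything on this type of behaviour. The likely explanation is that the earlier mount was simply never undone: renaming a VG or LV does not unmount it. I unmounted it, and ran lvremove again: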

root@ubuntu-local:~# lvremove /dev/vg_home02/lv_home02

Do you really want to remove and DISCARD active logical volume vg_home02/lv_home02? [y/n]: y

Logical volume "lv_home02" successfully removed

The LV was indeed deleted:

root@ubuntu-local:~# lvscan

ACTIVE '/dev/ubuntu-vg/ubuntu-lv' [<19.00 GiB] inherit

root@ubuntu-local:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

ubuntu-lv ubuntu-vg -wi-ao---- <19.00g

Removing the Volume Group with vgremove:

root@ubuntu-local:~# vgremove vg_home02

Volume group "vg_home02" successfully removed

root@ubuntu-local:~# vgs

VG #PV #LV #SN Attr VSize VFree

ubuntu-vg 1 1 0 wz--n- <19.00g 0

Removing the Physical Volumes:

root@ubuntu-local:~# pvs

PV VG Fmt Attr PSize PFree

/dev/sda3 ubuntu-vg lvm2 a-- <19.00g 0

/dev/sdb1 lvm2 --- 1023.00m 1023.00m

/dev/sdc1 lvm2 --- 1023.00m 1023.00m

root@ubuntu-local:~# pvremove /dev/sdb1 /dev/sdc1

Labels on physical volume "/dev/sdb1" successfully wiped.

Labels on physical volume "/dev/sdc1" successfully wiped.

root@ubuntu-local:~#

Move /home to a new LVM partition

Create a PV and VG:

root@ubuntu-local:~# vgcreate vghome /dev/sdd

Physical volume "/dev/sdd" successfully created.

Volume group "vghome" successfully created

Create the LV:

root@ubuntu-local:~# lvcreate -l 100%FREE vghome -n lvhome

Logical volume "lvhome" created.

Format it:

root@ubuntu-local:~# mkfs.ext4 /dev/mapper/vghome-lvhome

mke2fs 1.45.5 (07-Jan-2020)

Creating filesystem with 5241856 4k blocks and 1310720 inodes

Filesystem UUID: bebf060e-c851-456e-98da-53c5c88d4a34

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000

Allocating group tables: done

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

Mount the LV on /mnt:

mount /dev/mapper/vghome-lvhome /mnt

Copy everything on /home to /mnt(or something else if /mnt is occupied):

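# the trailing slash copies the contents of /home, dotfiles included

rsync -av /home/ /mnt/

# OR (-a preserves ownership, permissions and timestamps)

cp -a /home/. /mnt/

# run a diff (lost+found will show up only in /mnt; ignore it)

diff -r /home /mnt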
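A shell glob such as /home/* does not match names that start with a dot, so copying the directory contents through a trailing /. is the safer habit. The sketch below tries the copy-and-compare step on throwaway directories instead of /home and /mnt. The helper name copy_and_verify and the file names are invented for the demo.

```shell
# copy_and_verify SRC DST: copy the contents of SRC (dotfiles included)
# into DST, then compare both trees. Succeeds only if they match.
copy_and_verify() {
    cp -a "$1/." "$2/" && diff -r "$1" "$2"
}

# Demo on temporary directories.
src=$(mktemp -d)
dst=$(mktemp -d)
mkdir "$src/kavish"
echo data > "$src/kavish/notes.txt"
echo hidden > "$src/.profile"     # a top-level dotfile that "$src"/* would skip

copy_and_verify "$src" "$dst" && echo "copies match"
rm -rf "$src" "$dst"
```

On a real /home the diff will additionally report lost+found, which exists only on the freshly created ext4 filesystem.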

If everything looks OK, rename /home, recreate it, and mount the LV:

root@ubuntu-local:~# mv /home /home_orig

root@ubuntu-local:~# mkdir /home

root@ubuntu-local:~# mount /dev/mapper/vghome-lvhome /home

root@ubuntu-local:~# diff -r /home /home_orig

root@ubuntu-local:~# df -h /home

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vghome-lvhome 20G 111M 19G 1% /home

Modify /etc/fstab:

/dev/mapper/vghome-lvhome /home ext4 defaults 1 2

You can now delete /home_orig.

Move /home to a new LV from the default Volume Group

The steps are pretty much the same as above. The only difference is that you extend the default Volume Group with a new disk, or just create an LV if there’s already enough free space. On Ubuntu 20.04, the default LVM scheme upon installation is /dev/mapper/ubuntu--vg-ubuntu--lv.

- Extend the VG: vgextend ubuntu-vg /dev/sdd. Skip this step if you have enough space.

- Create the LV: lvcreate -l 100%FREE -n ubuntu-lvhome ubuntu-vg /dev/sdd.

- Run lvs -a -o +devices to verify that it’s using the disk you specified.

- Create a filesystem: mkfs.ext4 /dev/mapper/ubuntu--vg-ubuntu--lvhome.

- Rename /home to /home_orig, and recreate a /home directory.

- Mount the LV on a temporary mount point.

- Copy the data of /home_orig onto the LV, and run a diff -r /home_orig [LV mountpoint].

- Mount the LV on /home.

- Run df -h, and re-run diff just to be sure.

- Modify /etc/fstab.

- Remove /home_orig.

LVM Configuration and filters

The configuration is in /etc/lvm/lvm.conf. You can run lvmconfig to get a listing of the entries that are currently set. Many entries are commented out in the config file but are still active internally, because they are the default settings. For example, if I grep for filters:

root@ubuntu-local:~# lvmconfig --type default | grep filter

# filter=["a|.*|"]

# global_filter=["a|.*|"]

They’re active by default. The filter currently allows LVM to work with all types of devices on this host. The a in ["a|.*|"] stands for accept. Let’s add a reject rule for loop devices. Open /etc/lvm/lvm.conf and look for filter. Make a copy of the default line, and then add the following reject rule (the last line):

Example

# Accept every block device:

# filter = [ "a|.*|" ]

# Reject the cdrom drive:

# filter = [ "r|/dev/cdrom|" ]

# Work with just loopback devices, e.g. for testing:

# filter = [ "a|loop|", "r|.*|" ]

# Accept all loop devices and ide drives except hdc:

# filter = [ "a|loop|", "r|/dev/hdc|", "a|/dev/ide|", "r|.*|" ]

# Use anchors to be very specific:

# filter = [ "a|^/dev/hda8$|", "r|.*|" ]

#

# This configuration option has an automatic default value.

# filter = [ "a|.*|" ]

filter = ["r|^/dev/loop|", "a|.*|" ]

Verify the syntax:

root@ubuntu-local:~# lvmconfig --validate

LVM configuration valid.

root@ubuntu-local:~# lvmconfig --type default | grep filter

# filter=["a|.*|"]

# global_filter=["a|.*|"]

root@ubuntu-local:~# lvmconfig | grep filter

filter=["r|^/dev/loop|","a|.*|"]

As you can see, the last output takes precedence over the defaults.
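Patterns in a filter are tried in order, the first one that matches decides, and a device that matches nothing is accepted. The sketch below imitates that evaluation for patterns delimited by |, which is the only delimiter it understands. It is an illustration of the rule, not how LVM is implemented, and filter_decision is a made-up name.

```shell
# filter_decision DEVICE PATTERN...
# Each PATTERN looks like "a|regex|" (accept) or "r|regex|" (reject).
# The first pattern whose regex matches decides; no match means accept.
filter_decision() {
    dev=$1
    shift
    for pat in "$@"; do
        action=${pat%%"|"*}     # "a" or "r"
        regex=${pat#*"|"}       # drop the leading "a|" or "r|"
        regex=${regex%"|"}      # drop the trailing "|"
        if printf '%s\n' "$dev" | grep -Eq -- "$regex"; then
            if [ "$action" = r ]; then echo reject; else echo accept; fi
            return 0
        fi
    done
    echo accept
}

filter_decision /dev/loop0 'r|^/dev/loop|' 'a|.*|'   # reject
filter_decision /dev/sdb1  'r|^/dev/loop|' 'a|.*|'   # accept
```

Swapping the two patterns would accept /dev/loop0, because a|.*| matches everything and is then checked first. That is why the reject rule goes in front.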

Let’s test it by first creating a raw device with fallocate:

root@ubuntu-local:~# fallocate -l 100M /tmp/testdevice

Set it up as a loop device with losetup:

root@ubuntu-local:~# losetup -f /tmp/testdevice

and it’s rejected:

root@ubuntu-local:~# pvcreate /dev/loop0 -v

Device /dev/loop0 excluded by a filter.

Display and change Metadata with [pv/vg/lv]display, and [pv/vg/lv]change

To get more information, such as the size of Physical Extents and Logical Extents, or whether an LV is read/write or read-only, use pvdisplay, vgdisplay, and lvdisplay.

With pvchange, vgchange, and lvchange, you can change attributes: switch an LV between read-only and read/write, activate or deactivate an LV, modify UUIDs, etc.

Move data on a different disk with pvmove

Before you use pvmove, there are certain things to take into consideration:

- For example, if you’re moving /home to another disk, you won’t be able to perform the operation if you’re on an SSH connection or logged in as a user who’s using /home.

- If there are applications or processes using the disk, you have to stop them. Run lsof to verify.

- After unmounting, you’ll have to use your hypervisor’s console or a live CD, or reboot the machine, to be able to run an lvreduce or pvmove. Strictly speaking, pvmove itself can run while the LV is mounted; it is shrinking the ext4 filesystem with lvreduce -r that requires it to be unmounted.

I’ll be working on /dev/mapper/ubuntu--vg-ubuntu--lvhome, which is mounted on /home:

root@ubuntu-local:~# vgs

VG #PV #LV #SN Attr VSize VFree

ubuntu-vg 2 2 0 wz--n- 38.99g 0

root@ubuntu-local:~#

root@ubuntu-local:~# lvs -a -o +devices

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert Devices

ubuntu-lv ubuntu-vg -wi-ao---- <19.00g /dev/sda3(0)

ubuntu-lvhome ubuntu-vg -wi-ao---- <20.00g /dev/sdd(0)

root@ubuntu-local:~# df -h /home

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/ubuntu--vg-ubuntu--lvhome 20G 111M 19G 1% /home

It’s using the physical extents of /dev/sdd, which is 20G in size, and only 111M is used. I’ll move the data to /dev/sdf, which is 10G in size. First, let’s run lsof on /home before unmounting:

root@ubuntu-local:~# lsof /home

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

bash 2005 kavish cwd DIR 253,1 4096 262145 /home/kavish

sudo 3276 root cwd DIR 253,1 4096 262145 /home/kavish

You can kill bash; it’s not a problem. Once nothing is accessing /home, unmount it, and comment out its entry in /etc/fstab:

umount /home ; mount | grep home

Add the new disk to the VG:

root@ubuntu-local:~# vgextend ubuntu-vg /dev/sdf

Physical volume "/dev/sdf" successfully created.

Volume group "ubuntu-vg" successfully extended

Since the LV is only using 111M, you can reduce it to 1G and then move the extents to the new disk. This will speed up the pvmove process:

root@ubuntu-local:~# lvreduce -L 1G -r /dev/ubuntu-vg/ubuntu-lvhome

fsck from util-linux 2.34

/dev/mapper/ubuntu--vg-ubuntu--lvhome is in use.

e2fsck: Cannot continue, aborting.

Filesystem check failed.

The LV is still reported as in use. Reboot the machine, or use your hypervisor’s console, then rerun the above command:

root@ubuntu-local:~# lvreduce -L 1G -r /dev/ubuntu-vg/ubuntu-lvhome

fsck from util-linux 2.34

/dev/mapper/ubuntu--vg-ubuntu--lvhome: 3065/1310720 files (0.0% non-contiguous), 143287/5241856 blocks

resize2fs 1.45.5 (07-Jan-2020)

Resizing the filesystem on /dev/mapper/ubuntu--vg-ubuntu--lvhome to 262144 (4k) blocks.

The filesystem on /dev/mapper/ubuntu--vg-ubuntu--lvhome is now 262144 (4k) blocks long.

Size of logical volume ubuntu-vg/ubuntu-lvhome changed from <20.00 GiB (5119 extents) to 1.00 GiB (256 extents).

Logical volume ubuntu-vg/ubuntu-lvhome successfully resized.

Move the data with pvmove:

root@ubuntu-local:~# pvmove /dev/sdd /dev/sdf

/dev/sdd: Moved: 20.70%

/dev/sdd: Moved: 100.00%

Remove the old device:

root@ubuntu-local:~# vgreduce ubuntu-vg /dev/sdd

Removed "/dev/sdd" from volume group "ubuntu-vg"

Resize the LV with lvextend or lvresize:

root@ubuntu-local:~# lvextend -l +100%FREE -r ubuntu-vg/ubuntu-lvhome

fsck from util-linux 2.34

/dev/mapper/ubuntu--vg-ubuntu--lvhome: clean, 3065/65536 files, 58981/262144 blocks

Size of logical volume ubuntu-vg/ubuntu-lvhome changed from 1.00 GiB (256 extents) to <10.00 GiB (2559 extents).

Logical volume ubuntu-vg/ubuntu-lvhome successfully resized.

resize2fs 1.45.5 (07-Jan-2020)

Resizing the filesystem on /dev/mapper/ubuntu--vg-ubuntu--lvhome to 2620416 (4k) blocks.

The filesystem on /dev/mapper/ubuntu--vg-ubuntu--lvhome is now 2620416 (4k) blocks long.

Confirm it with an lvs:

root@ubuntu-local:~# lvs -a -o +devices

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert Devices

ubuntu-lv ubuntu-vg -wi-ao---- <19.00g /dev/sda3(0)

ubuntu-lvhome ubuntu-vg -wi-a----- <10.00g /dev/sdf(0)

Uncomment its entry in /etc/fstab, and mount it:

root@ubuntu-local:~# mount -av | grep home

/home : successfully mounted

Confirm it with df -h /home:

root@ubuntu-local:~# df -h /home

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/ubuntu--vg-ubuntu--lvhome 9.8G 103M 9.1G 2% /home

Extend or Shrink root LVM in rescue mode

https://tunnelix.com/recover-logical-volumes-data-from-deleted-lvm-partition/

https://unix.stackexchange.com/questions/67095/how-to-expand-ext4-partition-size-using-command-line

https://www.msp360.com/resources/blog/linux-resize-partition/

Configure and remove LVM with Ansible

The playbook:

---
- name: LVM Test
  hosts: all
  user: root
  tasks:
    - name: Creating VG
      lvg:
        vg: vgtest
        pvs: /dev/sdg

    - name: Creating LV
      lvol:
        vg: vgtest
        lv: lvtest
        size: 5G

    - name: Creating Mount Point
      file:
        path: /lvmtest
        state: directory

    - name: Creating file system
      filesystem:
        fstype: ext4
        dev: /dev/vgtest/lvtest

    - name: Mounting LV
      mount:
        path: /lvmtest
        src: /dev/vgtest/lvtest
        fstype: ext4
        state: mounted

Executing on localhost:

root@ubuntu-local:~/playbooks/lvm# ansible-playbook lvm_test.yml -c local

PLAY [LVM Test] ************************************************************************************

TASK [Gathering Facts] *****************************************************************************

ok: [192.168.100.104]

TASK [Creating VG] *********************************************************************************

changed: [192.168.100.104]

TASK [Creating LV] *********************************************************************************

changed: [192.168.100.104]

TASK [Creating Mount Point] ************************************************************************

changed: [192.168.100.104]

TASK [Creating file system] ************************************************************************

changed: [192.168.100.104]

TASK [Mounting LV] *********************************************************************************

changed: [192.168.100.104]

PLAY RECAP *****************************************************************************************

192.168.100.104 : ok=6 changed=6 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

Confirm with df -h /lvmtest:

root@ubuntu-local:~/playbooks/lvm# ansible test -m shell -a "df -h | grep lvm" -c local

192.168.100.104 | CHANGED | rc=0 >>

/dev/mapper/vgtest-lvtest 4.9G 20M 4.6G 1% /lvmtest

and /etc/fstab:

root@ubuntu-local:~/playbooks/lvm# ansible test -m shell -a "cat /etc/fstab | grep lvmtest" -c local

192.168.100.104 | CHANGED | rc=0 >>

/dev/vgtest/lvtest /lvmtest ext4 defaults 0 0

Removing an LV, VG, and PV

---
- name: LVM Test
  hosts: all
  user: root
  tasks:
    - name: Unmount LV
      mount:
        path: /lvmtest
        state: absent

    - name: Removing LV
      lvol:
        vg: vgtest
        lv: lvtest
        state: absent
        force: yes

    - name: Removing VG
      lvg:
        vg: vgtest
        state: absent

    - name: Removing PV
      command: pvremove /dev/sdg

Thinly Provisioned Volumes

Thin Provisioning, also called dynamic provisioning, allows space to be allocated on demand. It’s mostly used to save storage space by creating a disk with a virtual size: the disk pretends to have the space allocated without actually reserving it. Space is only consumed when data is written, and a volume can never grow beyond the virtual size specified when it was created.

In LVM, we have to create a Volume Group. That particular Volume Group will contain a Thin Pool, or an LV with the t attribute. Then we’ll use that Thin Pool to create Thin Volumes or virtual disks.

I’ll create a 1G VG called thinpool-vg:

root@ubuntu-local:~# vgcreate thinpool-vg /dev/sdh1

Physical volume "/dev/sdh1" successfully created.

Volume group "thinpool-vg" successfully created

root@ubuntu-local:~# vgs

VG #PV #LV #SN Attr VSize VFree

thinpool-vg 1 0 0 wz--n- 996.00m 996.00m

Next, I’m gonna create a 500M Thin Pool called thinpool-lv with --thinpool to specify the name of the LV:

root@ubuntu-local:~# lvcreate -L 500M --thinpool thinpool-lv thinpool-vg

Thin pool volume with chunk size 64.00 KiB can address at most 15.81 TiB of data.

Logical volume "thinpool-lv" created.

By issuing an lvs, we can see that thinpool-lv has the t(thin pool) attribute:

root@ubuntu-local:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

thinpool-lv thinpool-vg twi-a-tz-- 500.00m 0.00 10.84

Note: To get more details on attributes, run man lvs, and look for lv_attr.

It contains no data yet, and around 10% of the pool’s metadata area is already in use (the Meta% column). Now, let’s create some thin volumes from thinpool-lv with --thin to specify the Thin Pool created earlier, and -V for the virtual size:

root@ubuntu-local:~# for i in `seq 1 5`; do lvcreate -V 100M --thin thinpool-vg/thinpool-lv -n thinvl$i; done

Logical volume "thinvl1" created.

Logical volume "thinvl2" created.

Logical volume "thinvl3" created.

Logical volume "thinvl4" created.

Logical volume "thinvl5" created.

root@ubuntu-local:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

thinpool-lv thinpool-vg twi-aotz-- 500.00m 0.00 11.33

thinvl1 thinpool-vg Vwi-a-tz-- 100.00m thinpool-lv 0.00

thinvl2 thinpool-vg Vwi-a-tz-- 100.00m thinpool-lv 0.00

thinvl3 thinpool-vg Vwi-a-tz-- 100.00m thinpool-lv 0.00

thinvl4 thinpool-vg Vwi-a-tz-- 100.00m thinpool-lv 0.00

thinvl5 thinpool-vg Vwi-a-tz-- 100.00m thinpool-lv 0.00

Five Thin Volumes of 100M were created. The V attribute stands for Virtual Disk and the pool section is showing thinpool-lv. To create a filesystem, you simply specify /dev/mapper/vg/lv. You don’t have to mention thinpool-lv. The attributes will take care of that.

Everything is well provisioned. A 500M Thin Pool is virtually allocated among 5 Thin Volumes. Let’s format and mount the disks:

root@ubuntu-local:~# mkdir /mnt{1..5}

root@ubuntu-local:~# for i in `seq 1 5`; do mkfs.ext4 /dev/mapper/thinpool--vg-thinvl$i; done

root@ubuntu-local:~# for i in `seq 1 5`; do mount /dev/mapper/thinpool--vg-thinvl$i /mnt$i; done

Issuing a df -h | grep mnt and lvs:

root@ubuntu-local:~# df -h | grep mnt

/dev/mapper/thinpool--vg-thinvl1 93M 72K 86M 1% /mnt1

/dev/mapper/thinpool--vg-thinvl2 93M 72K 86M 1% /mnt2

/dev/mapper/thinpool--vg-thinvl3 93M 72K 86M 1% /mnt3

/dev/mapper/thinpool--vg-thinvl4 93M 72K 86M 1% /mnt4

/dev/mapper/thinpool--vg-thinvl5 93M 72K 86M 1% /mnt5

root@ubuntu-local:~#

root@ubuntu-local:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

thinpool-lv thinpool-vg twi-aotz-- 500.00m 7.31 11.33

thinvl1 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 7.31

thinvl2 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 7.31

thinvl3 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 7.31

thinvl4 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 7.31

thinvl5 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 7.31

The data consumption of the Thin Pool is around 7%, and 86M of space is available on each virtual disk. Even though 500M is distributed among 5 Thin Volumes, you can provision more space than actually exists in the Thin Pool. Let’s say thinvl1 is using 50M and thinvl2 is using 75M: that makes a total of 125M, and 375M is still freely available to hand out. This is possible because space is only allocated when data is written.

Let’s create some fake data on all mount points, and take a look at the output of lvs:

root@ubuntu-local:~# for i in `seq 1 5`; do head -c 10M /dev/urandom > /mnt$i/fakefile0.txt; done

root@ubuntu-local:~#

root@ubuntu-local:~#

root@ubuntu-local:~# df -h | grep mnt

/dev/mapper/thinpool--vg-thinvl1 93M 11M 76M 12% /mnt1

/dev/mapper/thinpool--vg-thinvl2 93M 11M 76M 12% /mnt2

/dev/mapper/thinpool--vg-thinvl3 93M 11M 76M 12% /mnt3

/dev/mapper/thinpool--vg-thinvl4 93M 11M 76M 12% /mnt4

/dev/mapper/thinpool--vg-thinvl5 93M 11M 76M 12% /mnt5

root@ubuntu-local:~#

root@ubuntu-local:~#

root@ubuntu-local:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

thinpool-lv thinpool-vg twi-aotz-- 500.00m 17.31 12.30

thinvl1 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 17.31

thinvl2 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 17.31

thinvl3 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 17.31

thinvl4 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 17.31

thinvl5 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 17.31

ubuntu-lv ubuntu-vg -wi-ao---- <19.00g

ubuntu-lvhome ubuntu-vg -wi-ao---- <10.00g

root@ubuntu-local:~#

Now, 17% of the pool is used, and df reports 380M available in total (76M on each of the five volumes). You can add more PVs to the VG and extend the Thin Pool, but you can also create more Thin Volumes, because there’s still enough free space in the pool. LVM will give a warning, though, because you are promising more space than you have. This is called Over Provisioning.
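To put a number on over provisioning, you can compare the sum of the virtual sizes with the size of the pool. The following is only a minimal sketch: the function name overprovision_pct is made up for illustration, and the sizes are plain numbers in MiB that you would read from lvs yourself.

```shell
# Hypothetical helper: promised (virtual) space as a percentage of real pool space.
# $1 = pool size in MiB, remaining arguments = virtual sizes of the thin volumes in MiB.
overprovision_pct() {
  pool=$1
  shift
  total=0
  for size in "$@"; do
    total=$((total + size))
  done
  echo $((total * 100 / pool))
}

# Five 100M thin volumes on a 500M pool: the pool can still back every volume.
overprovision_pct 500 100 100 100 100 100
# One more 100M volume and more space is promised than the pool holds.
overprovision_pct 500 100 100 100 100 100 100
```

Any result above 100 means the pool is over-provisioned and should be monitored.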

Over Provisioning and Auto Growth

When over provisioning is in place, monitoring becomes essential. In the above section, I created a Thin Pool of 500M and 5 Thin Volumes of 100M each. Everything is OK for now: even if all the disks fill up, it won’t be a problem, because the pool can back every volume in full.

Let’s say I add one more Thin Volume of 100M. Now it’s 600M in total, but the pool only provides 500M. Even then, it won’t cause a problem, as long as the users of the disks don’t write more than 500M in total. And even if they do, LVM can extend the Thin Pool automatically, provided the VG has free space. This is called Thin Pool Auto Extension.

That feature is controlled by two settings in /etc/lvm/lvm.conf. A threshold of 100 disables it, so check the values on your system; here they are:

root@ubuntu-local:~# cat /etc/lvm/lvm.conf | grep "thin_pool_auto" | grep -v "#"

thin_pool_autoextend_threshold = 80

thin_pool_autoextend_percent = 20

Those two lines work together. When the Thin Pool’s usage exceeds 80%, the pool is extended by 20% of its current size. You need free space in the Volume Group for this to work.
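As a rough illustration of how the two values interact, the loop below keeps growing a pool by the configured percentage while its usage is above the threshold. It is a simplified model, not LVM’s actual code: the function name is made up, sizes are whole MiB, the 514M of written data is an assumed figure, and rounding to physical extents is ignored.

```shell
# Simplified model of thin pool auto extension (not LVM's implementation).
# $1 = pool size in MiB, $2 = data written in MiB,
# $3 = thin_pool_autoextend_threshold, $4 = thin_pool_autoextend_percent
autoextend_size() {
  size=$1
  used=$2
  # Extend while used/size is above the threshold percentage.
  while [ $((used * 100)) -gt $((size * $3)) ]; do
    size=$((size + size * $4 / 100))
  done
  echo "$size"
}

# A 500M pool holding roughly 514M of data grows to 600M, then to 720M.
autoextend_size 500 514 80 20
```

Each step is taken from the current size, so the pool grows by 100M first and by 120M next. The free space has to come from the VG; if the VG is full, no extension can happen.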

Let’s create one more Thin Volume, and see what happens:

root@ubuntu-local:~# lvcreate -V 100M --thin thinpool-vg/thinpool-lv -n thin-vl6

WARNING: Sum of all thin volume sizes (600.00 MiB) exceeds the size of thin pool thinpool-vg/thinpool-lv and the amount of free space in volume group (488.00 MiB).

Logical volume "thin-vl6" created.

root@ubuntu-local:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

thin-vl6 thinpool-vg Vwi-a-tz-- 100.00m thinpool-lv 0.00

thinpool-lv thinpool-vg twi-aotz-- 500.00m 17.31 12.40

thinvl1 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 17.31

thinvl2 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 17.31

thinvl3 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 17.31

thinvl4 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 17.31

thinvl5 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 17.31

I get a warning that the thin volumes together (600M) exceed both the size of the thin pool and the free space in the volume group (488M).

Give it a filesystem, and mount it:

root@ubuntu-local:~# mkfs.ext4 /dev/mapper/thinpool--vg-thin--vl6

mke2fs 1.45.5 (07-Jan-2020)

Discarding device blocks: done

Creating filesystem with 25600 4k blocks and 25600 inodes

Allocating group tables: done

Writing inode tables: done

Creating journal (1024 blocks): done

Writing superblocks and filesystem accounting information: done

root@ubuntu-local:~# mkdir /mnt6

root@ubuntu-local:~# mount /dev/mapper/thinpool--vg-thin--vl6 /mnt6

Let’s fill up the disks with a bunch of random data, and see what lvs gives us:

root@ubuntu-local:~# for i in `seq 1 6`; do head -c 70M /dev/urandom > /mnt$i/fa1kefi23le1.txt; done

root@ubuntu-local:~#

root@ubuntu-local:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

thin-vl6 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 77.31

thinpool-lv thinpool-vg twi-aotz-- 720.00m 71.37 17.19

thinvl1 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 87.31

thinvl2 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 87.31

thinvl3 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 87.31

thinvl4 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 87.31

thinvl5 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 87.31

ubuntu-lv ubuntu-vg -wi-ao---- <19.00g

ubuntu-lvhome ubuntu-vg -wi-ao---- <10.00g

root@ubuntu-local:~#

root@ubuntu-local:~#

root@ubuntu-local:~# vgs

VG #PV #LV #SN Attr VSize VFree

thinpool-vg 1 7 0 wz--n- 996.00m 268.00m

ubuntu-vg 2 2 0 wz--n- 28.99g 0

root@ubuntu-local:~#

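Now the size of the Thin Pool is 720M: it was auto-extended twice by 20% (500M to 600M, then 600M to 720M), and the free space in the VG dropped from 488M to 268M. That’s pretty cool. I mistyped the name of the 6th volume, so let’s rename it: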

root@ubuntu-local:~# lvrename /dev/thinpool-vg/thin-vl6 thinpool-vg/thinvl6

Renamed "thin-vl6" to "thinvl6" in volume group "thinpool-vg"

Extending a Thin Pool

Extending a Thin Pool is very simple: first extend the Thin Pool’s metadata, then extend the Thin Pool itself.

During the creation of the Thin Pool, its metadata size is set according to the following formula:

(Pool_LV_size / Pool_LV_chunk_size * 64)

The maximum supported value is 17G. I prefer to add 10M per GB. If I extend a 100G pool to 150G, I’ll extend the metadata size by at least 500M.
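Here is the formula worked through for the 500M pool used in this article. This is only a sketch under two assumptions that are not stated above: that the 64 means 64 bytes of metadata per chunk, and that the pool uses a 64KiB chunk size. The function name is made up.

```shell
# Hypothetical helper applying Pool_LV_size / Pool_LV_chunk_size * 64.
# Assumption: the result is in bytes (64 bytes of metadata per chunk).
# $1 = pool size in bytes, $2 = chunk size in bytes
thin_meta_bytes() {
  echo $(( $1 / $2 * 64 ))
}

# 500MiB pool with an assumed 64KiB chunk size.
thin_meta_bytes $((500 * 1024 * 1024)) $((64 * 1024))
```

Under those assumptions the estimate is about 500KiB, well below a single 4M physical extent. LVM rounds the size up, which fits the 4.00m (1 extent) metadata LV shown by lvs -a.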

Run lvs -a to view the current metadata size:

root@ubuntu-local:~# lvs -a | grep meta

[thinpool-lv_tmeta] thinpool-vg ewi-ao---- 4.00m

Now, I’ll extend the VG by 10G:

root@ubuntu-local:~# vgextend thinpool-vg /dev/sdg

Physical volume "/dev/sdg" successfully created.

Volume group "thinpool-vg" successfully extended

root@ubuntu-local:~#

root@ubuntu-local:~# vgs

VG #PV #LV #SN Attr VSize VFree

thinpool-vg 2 7 0 wz--n- <10.97g <10.26g

ubuntu-vg 2 2 0 wz--n- 28.99g 0

Extending the metadata by 10M:

root@ubuntu-local:~# lvextend --poolmetadatasize +10M thinpool-vg/thinpool-lv

Rounding size to boundary between physical extents: 12.00 MiB.

Size of logical volume thinpool-vg/thinpool-lv_tmeta changed from 4.00 MiB (1 extents) to 16.00 MiB (4 extents).

Logical volume thinpool-vg/thinpool-lv_tmeta successfully resized.

root@ubuntu-local:~#

root@ubuntu-local:~# lvs -a | grep meta

[thinpool-lv_tmeta] thinpool-vg ewi-ao---- 16.00m

Extending the Thin Pool by 1G:

root@ubuntu-local:~# lvextend -L +1G /dev/thinpool-vg/thinpool-lv

Size of logical volume thinpool-vg/thinpool-lv_tdata changed from 720.00 MiB (180 extents) to 1.70 GiB (436 extents).

Logical volume thinpool-vg/thinpool-lv_tdata successfully resized.

root@ubuntu-local:~#

root@ubuntu-local:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

thinpool-lv thinpool-vg twi-aotz-- 1.70g 29.47 11.82

--REDACTED---

Removing a Thin Pool

First, unmount everything, then remove the Thin Volumes:

root@ubuntu-local:~# for i in `seq 1 6`; do umount /mnt$i; done

root@ubuntu-local:~#

root@ubuntu-local:~# lvremove /dev/thinpool-vg/thinvl[1-6] -y

Logical volume "thinvl1" successfully removed

Logical volume "thinvl2" successfully removed

Logical volume "thinvl3" successfully removed

Logical volume "thinvl4" successfully removed

Logical volume "thinvl5" successfully removed

Logical volume "thinvl6" successfully removed

root@ubuntu-local:~#

Remove the Thin Pool:

root@ubuntu-local:~# lvremove /dev/thinpool-vg/thinpool-lv

Do you really want to remove and DISCARD active logical volume thinpool-vg/thinpool-lv? [y/n]: y

Logical volume "thinpool-lv" successfully removed

Then remove the VG, and verify with vgs and lvs:

root@ubuntu-local:~# vgremove thinpool-vg

Volume group "thinpool-vg" successfully removed

root@ubuntu-local:~#

root@ubuntu-local:~# vgs

VG #PV #LV #SN Attr VSize VFree

ubuntu-vg 2 2 0 wz--n- 28.99g 0

root@ubuntu-local:~#

root@ubuntu-local:~#

root@ubuntu-local:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

ubuntu-lv ubuntu-vg -wi-ao---- <19.00g

ubuntu-lvhome ubuntu-vg -wi-ao---- <10.00g

root@ubuntu-local:~#

With Ansible

The playbook:

root@ubuntu-local:~/playbooks/lvm# cat thinpool.yml

---
- name: Deploying a Thin Pool and Thin Volume
  hosts: all
  become: true
  tasks:
    - name: Install Packages
      apt:
        name: thin-provisioning-tools
        state: latest

    - name: Creating VG
      lvg:
        vg: thinpool-vg
        pvs: /dev/sdh1

    - name: Creating Thin Pool
      lvol:
        vg: thinpool-vg
        thinpool: thinpool-lv
        size: 500m

    - name: Creating Thin Volume
      lvol:
        vg: thinpool-vg
        lv: thinvl-1
        thinpool: thinpool-lv
        size: 100m

    - name: Creating a filesystem on the Thin Volume
      filesystem:
        fstype: ext4
        dev: /dev/thinpool-vg/thinvl-1

    - name: Mounting the Thin Volume
      mount:
        path: /mnt1
        src: /dev/thinpool-vg/thinvl-1
        state: mounted

Verify the syntax:

root@ubuntu-local:~/playbooks/lvm# ansible-playbook thinpool.yml --syntax-check

playbook: thinpool.yml

Executing the playbook:

root@ubuntu-local:~/playbooks/lvm# ansible-playbook thinpool.yml -c local

PLAY [Deploying a Thin Pool and Thin Volume] *******************************************************

TASK [Gathering Facts] *****************************************************************************

ok: [192.168.100.104]

TASK [Install Packages] ****************************************************************************

ok: [192.168.100.104]

TASK [Creating VG] *********************************************************************************

changed: [192.168.100.104]

TASK [Creating Thin Pool] **************************************************************************

changed: [192.168.100.104]

TASK [Creating Thin Volume] ************************************************************************

changed: [192.168.100.104]

TASK [Creating a filesystem on the Thin Volume] ****************************************************

changed: [192.168.100.104]

TASK [Mounting the Thin Volume] ********************************************************************

changed: [192.168.100.104]

PLAY RECAP *****************************************************************************************

192.168.100.104 : ok=7 changed=5 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

The result:

root@ubuntu-local:~/playbooks/lvm# vgs

VG #PV #LV #SN Attr VSize VFree

thinpool-vg 1 2 0 wz--n- 996.00m 488.00m

ubuntu-vg 2 2 0 wz--n- 28.99g 0

root@ubuntu-local:~/playbooks/lvm#

root@ubuntu-local:~/playbooks/lvm# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

thinpool-lv thinpool-vg twi-aotz-- 500.00m 1.46 10.94

thinvl-1 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 7.31

ubuntu-lv ubuntu-vg -wi-ao---- <19.00g

ubuntu-lvhome ubuntu-vg -wi-ao---- <10.00g

Monitoring

For a case study on monitoring thin-provisioned storage, see: https://engineerworkshop.com/blog/lvm-thin-provisioning-and-monitoring-storage-use-a-case-study/
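As a starting point, a very small check can flag any LV whose Data% is at or above a limit. This is a minimal sketch, not a complete monitoring setup: the function name over_threshold is made up, and it expects "name percent" pairs on stdin. On a live system, those could come from lvs --noheadings -o lv_name,data_percent; here I feed it values copied from the lvs output earlier in this article.

```shell
# Hypothetical helper: print every LV whose Data% is at or above the limit (default 80).
# Reads "name percent" pairs on stdin; LVs without a Data% value are skipped.
over_threshold() {
  awk -v limit="${1:-80}" '$2 != "" && $2 + 0 >= limit { print $1, $2 }'
}

# Sample input taken from the lvs output after filling the disks.
printf '%s\n' 'thinpool-lv 71.37' 'thinvl1 87.31' 'thin-vl6 77.31' | over_threshold 80
```

Only thinvl1 is reported for this sample. A script like this could be run from cron and its output mailed to an admin.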

Snapshots

A snapshot is the state of the system or disk captured at one particular point in time. LVM uses copy-on-write (COW) to create snapshots.

Once a snapshot is created, its data appears identical to the original. But it’s just a reference to the original, not a copy of the data. Only when you modify a block in the original does the snapshot receive a copy of that block’s original contents. And that’s it: that’s the only copy it will retain.

If you modify a file on the original again or create a new one, the snapshot won’t store a copy of the newly modified file or of the new file, but snapshot space will still be used. That’s because Copy-On-Write snapshots contain the differences between the snapshot and the origin. I didn’t get it at first, so I asked a question on Stack Exchange. Here’s the answer:

Copy-on-write LVM snapshots contain the differences between the snapshot and the origin. So every time a change is made to either, the change is added to the snapshot, potentially increasing the space needed in the snapshot. (“Potentially” because changes to blocks that have already been snapshotted for a previous change don’t need more space, they overwrite the previous change — the goal is to track the current differences, not the history of all the changes.)

LVs aren’t aware of the structure of data stored inside them. Appending 5MiB to a file results in at least 5MiB of changes written to the origin, so the changed blocks need to be added to the snapshot (to preserve their snapshotted contents). Writing another 5MiB to the file results in another 5MiB (at least) of changes to the origin, which result in a similar amount of data being written to the snapshot (again, to preserve the original contents). The contents of the file, or indeed the volume, as seen in the snapshot, never change as a result of changes to the origin.
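The point about already-snapshotted blocks can be shown with a toy model. This is not how LVM is implemented; it only tracks which chunk numbers have been preserved, and the function names are made up for illustration.

```shell
# Toy model of a copy-on-write snapshot: remember which origin chunks
# have already had their old contents copied into the snapshot.
copied=""

# Simulate a write to chunk number $1 on the origin.
cow_write() {
  case " $copied " in
    *" $1 "*) ;;                 # already preserved: no extra snapshot space
    *) copied="$copied $1" ;;    # first change: old contents go to the snapshot
  esac
}

# Number of chunks currently stored in the snapshot.
snapshot_chunks() {
  set -- $copied
  echo $#
}

cow_write 7   # first write to chunk 7: snapshot grows
cow_write 7   # second write to chunk 7: nothing new to preserve
cow_write 9   # first write to chunk 9: snapshot grows again
snapshot_chunks
```

Three writes only cost two chunks of snapshot space, because the second write to chunk 7 hits a chunk whose original contents were already saved.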

To create a snapshot, you have to give it a size. If the snapshot runs out of space, it becomes unusable. At first, you can give it a size that feels reasonable, then extend it later if you want. Snapshots also work for Thin Volumes.

To create a snapshot, run lvcreate with the -s option. The syntax is:

lvcreate -L [size] -s -n [snapshot_name] [/dev/VG/LV]

Note: A snapshot can’t be named snapshot. The word snapshot is a reserved keyword. You can name it something like etc-snapshot-2021_03_05.

I’ll be taking a snapshot of ubuntu-lvhome. It has a capacity of 10G, but only 103M is being used:

root@ubuntu-local:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

ubuntu-lvhome ubuntu-vg -wi-ao---- <10.00g

root@ubuntu-local:~# df -h | grep home

/dev/mapper/ubuntu--vg-ubuntu--lvhome 9.8G 103M 9.1G 2% /home

To be able to create a snapshot, you’ll need enough space on the VG. Let’s create a snapshot of 300M:

root@ubuntu-local:~# lvcreate -L 300M -s -n ubuntu-home-snap /dev/ubuntu-vg/ubuntu-lvhome

Logical volume "ubuntu-home-snap" created.

root@ubuntu-local:~#

root@ubuntu-local:~#

root@ubuntu-local:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync

ubuntu-home-snap ubuntu-vg swi-a-s--- 300.00m ubuntu-lvhome 0.01

ubuntu-lvhome ubuntu-vg owi-aos--- <10.00g

root@ubuntu-local:~# dmsetup table | grep home

ubuntu--vg-ubuntu--home--snap-cow: 0 614400 linear 8:96 2048

ubuntu--vg-ubuntu--lvhome: 0 20963328 snapshot-origin 253:3

ubuntu--vg-ubuntu--home--snap: 0 20963328 snapshot 253:3 253:5 P 8

ubuntu--vg-ubuntu--lvhome-real: 0 20963328 linear 8:80 2048

As you can see, only 0.01% of the snapshot is being used. In the Origin column, the snapshot points to the original LV. Just like dmsetup, /dev/mapper also shows 4 devices:

root@ubuntu-local:~# ls -l /dev/mapper/ | grep home

lrwxrwxrwx 1 root root 7 May 22 07:55 ubuntu--vg-ubuntu--home--snap -> ../dm-6

lrwxrwxrwx 1 root root 7 May 22 07:55 ubuntu--vg-ubuntu--home--snap-cow -> ../dm-5

lrwxrwxrwx 1 root root 7 May 22 07:55 ubuntu--vg-ubuntu--lvhome -> ../dm-4

lrwxrwxrwx 1 root root 7 May 22 07:55 ubuntu--vg-ubuntu--lvhome-real -> ../dm-3

- home--snap: The snapshot that the user will interact with.

- home--snap-cow: The actual Copy-On-Write device that’s holding the data of the snapshot.

- lvhome: The logical volume that the user will interact with.

- lvhome-real: The real logical volume that lvhome retrieves data from.

You can find more information with dmsetup table:

root@ubuntu-local:~# dmsetup table | grep home

ubuntu--vg-ubuntu--home--snap-cow: 0 614400 linear 8:96 2048

ubuntu--vg-ubuntu--lvhome: 0 20963328 snapshot-origin 253:3

ubuntu--vg-ubuntu--home--snap: 0 20963328 snapshot 253:3 253:5 P 8

ubuntu--vg-ubuntu--lvhome-real: 0 20963328 linear 8:80 2048

You can read about it here.
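The second number on each dmsetup table line is the length of the device in 512-byte sectors. A quick conversion (a minimal sketch; sectors_to_mib is a name I made up) shows that these lengths match the sizes reported by lvs:

```shell
# Convert a length in 512-byte sectors to MiB (2048 sectors per MiB).
sectors_to_mib() {
  echo $(( $1 / 2048 ))
}

sectors_to_mib 614400     # the -cow device: the 300M snapshot
sectors_to_mib 20963328   # the origin: just under 10G (10240 MiB)
```

The trailing "P 8" on the snapshot line means a persistent snapshot with a chunk size of 8 sectors, which is 4KiB.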

To look at the files in the snapshot, you have to mount it:

root@ubuntu-local:~# ls /home

admin01 admin02 kavish lost+found sysadmin_scripts

root@ubuntu-local:~#

root@ubuntu-local:~# mount /dev/mapper/ubuntu--vg-ubuntu--home--snap /snap

root@ubuntu-local:~#

root@ubuntu-local:~# ls /snap

admin01 admin02 kavish lost+found sysadmin_scripts

root@ubuntu-local:~#

Note: I recommend mounting the snapshot read-only, in case you make a mistake.

If you run df, it appears that you have two disks of exactly the same size on the system, which is not true:

root@ubuntu-local:~# df -h | grep home

/dev/mapper/ubuntu--vg-ubuntu--lvhome 9.8G 103M 9.1G 2% /home

/dev/mapper/ubuntu--vg-ubuntu--home--snap 9.8G 103M 9.1G 2% /snap

Each time you add or modify a file on the origin, the snapshot keeps track of the changes, which uses space:

root@ubuntu-local:~# lvs | grep snap

ubuntu-home-snap ubuntu-vg swi-aos--- 300.00m ubuntu-lvhome 0.01

root@ubuntu-local:~#

root@ubuntu-local:~# head -c 10M /dev/urandom > /home/sysadmin_scripts/admin01.txt

root@ubuntu-local:~# head -c 10M /dev/urandom > /home/sysadmin_scripts/admin02.txt

root@ubuntu-local:~# lvs | grep snap

ubuntu-home-snap ubuntu-vg swi-aos--- 300.00m ubuntu-lvhome 3.37

root@ubuntu-local:~#

It jumped from 0.01% to 3.37% (at the end of the line). You have to monitor the snapshot’s usage with lvs, because df only reports filesystem usage, not the space consumed in the snapshot:

root@ubuntu-local:~# df -h | grep home

/dev/mapper/ubuntu--vg-ubuntu--lvhome 9.8G 123M 9.1G 2% /home

/dev/mapper/ubuntu--vg-ubuntu--home--snap 9.8G 103M 9.1G 2% /snap

Autoextend Snapshots

Just like Thin Pools, snapshots can be extended automatically, using similar settings in /etc/lvm/lvm.conf:

# 'snapshot_autoextend_threshold' and 'snapshot_autoextend_percent' define

# how to handle automatic snapshot extension. The former defines when the

# snapshot should be extended: when its space usage exceeds this many

# percent. The latter defines how much extra space should be allocated for

# the snapshot, in percent of its current size.

#

# For example, if you set snapshot_autoextend_threshold to 70 and

# snapshot_autoextend_percent to 20, whenever a snapshot exceeds 70% usage,

# it will be extended by another 20%. For a 1G snapshot, using up 700M will

# trigger a resize to 1.2G. When the usage exceeds 840M, the snapshot will

# be extended to 1.44G, and so on.

#

# Setting snapshot_autoextend_threshold to 100 disables automatic

# extensions. The minimum value is 50 (A setting below 50 will be treated

# as 50).

snapshot_autoextend_threshold = 50

snapshot_autoextend_percent = 50
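To see what these values mean for the 300M snapshot created earlier, the sketch below prints the usage that triggers each extension and the size afterwards. It is a simplified model with a made-up function name: sizes are whole MiB, and LVM’s rounding to physical extents is ignored.

```shell
# Simplified model: print "<trigger> <new size>" in MiB for each extension step.
# $1 = snapshot size, $2 = snapshot_autoextend_threshold,
# $3 = snapshot_autoextend_percent, $4 = number of steps to print
snapshot_growth() {
  size=$1
  step=0
  while [ "$step" -lt "$4" ]; do
    trigger=$((size * $2 / 100))
    size=$((size + size * $3 / 100))
    echo "$trigger $size"
    step=$((step + 1))
  done
}

# 300M snapshot with threshold = 50 and percent = 50.
snapshot_growth 300 50 50 2
```

With these settings, the snapshot grows to 450M once usage passes 150M, and to 675M once it passes 225M. Calling the function with 1000 70 20 reproduces the example from the comment: 700M triggers a resize to 1.2G, and 840M triggers a resize to 1.44G.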

Merge a Snapshot

To merge a snapshot, use lvconvert --merge. It merges the snapshot into the origin, reverting the origin to the snapshot’s contents, and then removes the snapshot. Neither the origin nor the snapshot should be open during this process. You can verify with lsof. If one is open, the merge is delayed until the next time the origin is activated.

If the origin is open, run the command, and verify whether the snapshot is still present on the system. Then refresh the VG and activate the LV:

root@ubuntu-local:~# lvconvert --merge /dev/ubuntu-vg/ubuntu-home-snap

Delaying merge since origin is open.

Merging of snapshot ubuntu-vg/ubuntu-home-snap will occur on next activation of ubuntu-vg/ubuntu-lvhome.

root@ubuntu-local:~#

root@ubuntu-local:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

thinpool-lv thinpool-vg twi-aotz-- 500.00m 1.46 10.94

thinvl-1 thinpool-vg Vwi-aotz-- 100.00m thinpool-lv 7.31

ubuntu-lv ubuntu-vg -wi-ao---- <19.00g

ubuntu-lvhome ubuntu-vg Owi-aos--- <10.00g

root@ubuntu-local:~# lvchange --refresh ubuntu-vg

root@ubuntu-local:~# lvchange -ay /dev/ubuntu-vg/ubuntu-lvhome

root@ubuntu-local:~#

The activation doesn’t help in this case, because the LV holds the /home directory, which is in use. Even after unmounting /home, I had to reboot my VM for the merge to take place.

With Ansible

TODO

RAID, Striped, and Cache

TODO